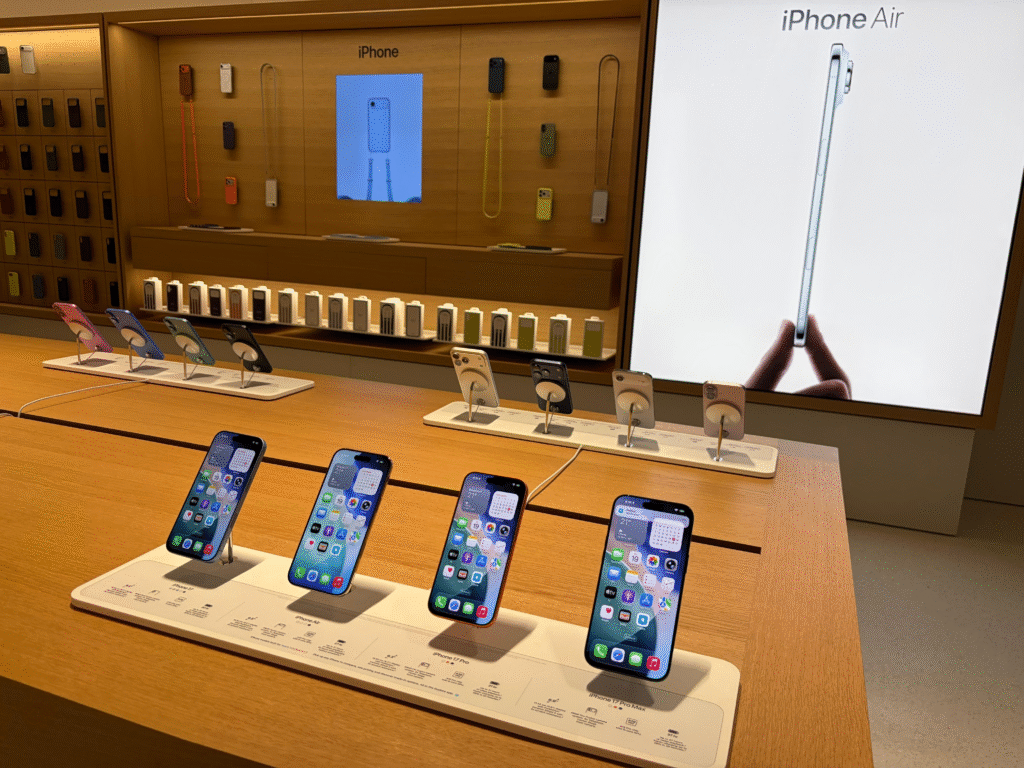

Every year, Apple rolls out exciting deals during the festive season in India, and 2025 is no exception. This year's headline offer, up to Rs 10,000 instant cashback on iPhones, Watches and more, has become the talk of the town, giving customers a golden opportunity to upgrade their devices at attractive prices.

With Diwali and other celebrations around the corner, Apple’s offers are designed to make premium technology more accessible while adding festive cheer to shopping.

In this TazaJunction.com article, we’ll break down the details of these offers, the products included, and why this year’s festive campaign is one of Apple’s most rewarding yet.

Why Apple’s Festive Offers Matter

Apple has steadily expanded its presence in India, opening flagship stores, strengthening online sales, and offering localized services.

The festive season is a crucial time for the brand, as it coincides with India’s biggest shopping period. With its 2025 festive offers, including up to Rs 10,000 instant cashback on iPhones, Watches and more, the company is not only boosting sales but also deepening its connection with Indian consumers.

For many buyers, Apple products are aspirational. These offers bridge the gap between desire and affordability, making it easier for customers to own the latest iPhones, Apple Watches, iPads, and Macs.

The Highlight: Rs 10,000 Instant Cashback

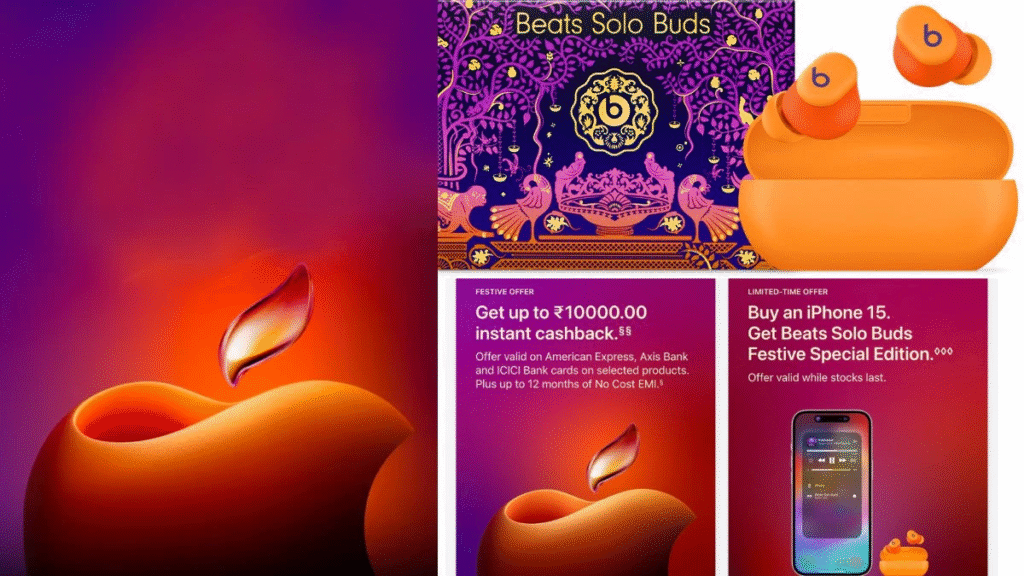

The centerpiece of this year’s campaign is instant cashback on select products.

Customers using eligible credit cards, such as those issued by American Express, ICICI Bank, and Axis Bank, can get up to Rs 10,000 off instantly at checkout.

The cashback applies across Apple’s product lineup, with the amount varying by product:

- iPhones: The latest iPhone 17 series and even select iPhone 16 models.

- Apple Watches: Series 11, SE 3, and the rugged Apple Watch Ultra 3.

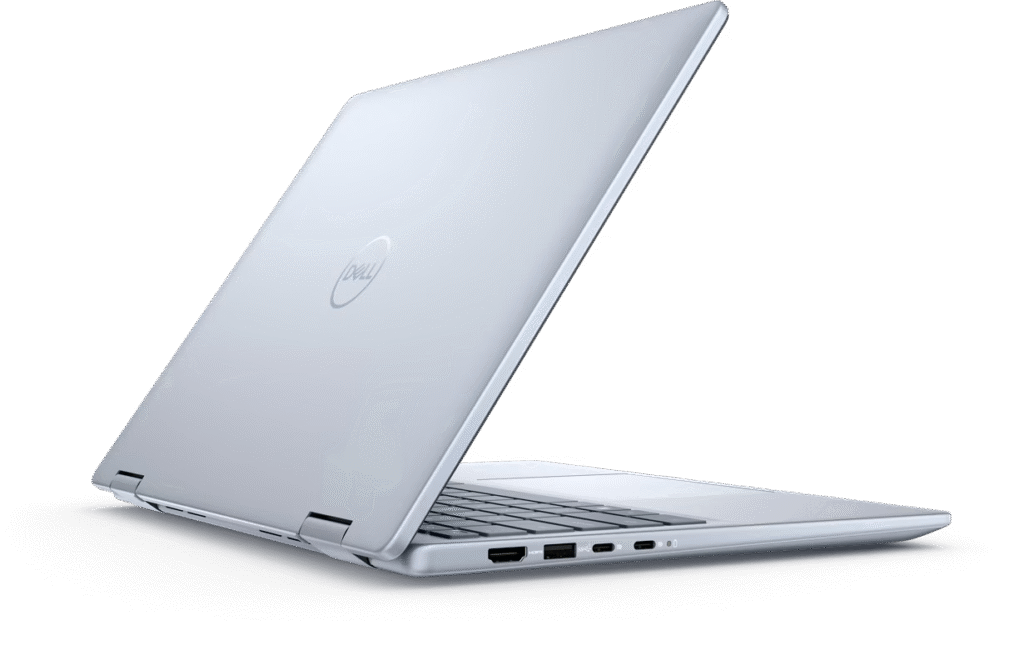

- MacBooks: MacBook Air and MacBook Pro models with M4 and M3 chips.

- iPads: iPad Air, iPad Pro, and iPad Mini.

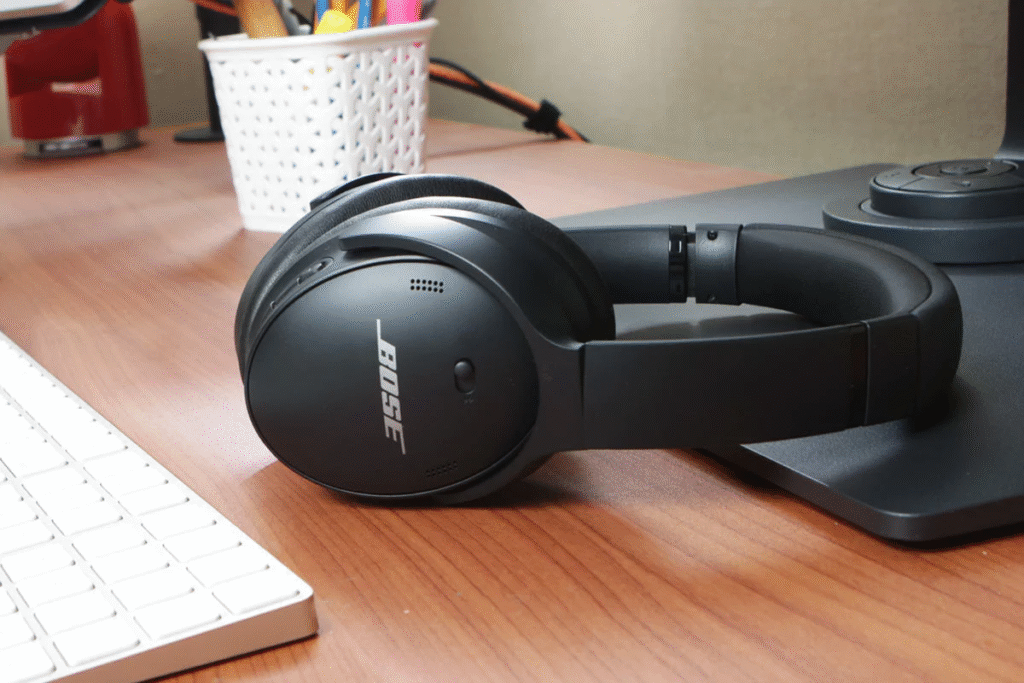

- AirPods and Accessories: AirPods Pro 3, AirPods Max, and Apple Pencils.

The full Rs 10,000 cashback applies to high-value purchases like MacBooks, while iPhones, Watches, and other products carry smaller but still significant savings.

No-Cost EMI Options

Alongside cashback, Apple is offering no-cost EMI plans of up to 12 months with leading banks. This makes premium devices more affordable by spreading payments without additional interest.

For example, customers can buy an iPhone 17 Pro and pay in easy monthly installments while still enjoying the cashback benefit.

This combination of cashback and EMI means the festive offers cater both to budget-conscious buyers and to those looking for flexible payment options.

Trade-In Benefits

Apple’s Trade-In program is another highlight of the festive campaign. Customers can exchange eligible smartphones, Macs, or iPads for credit toward new purchases. Depending on the device, trade-in values can go as high as Rs 64,000.

When combined with the instant cashback, trade-in makes upgrading even more cost-effective. For instance, trading in an older iPhone while also claiming cashback on a new iPhone 17 could add up to substantial savings.

Free Personalization and Setup

Apple is also offering free personalization services during the festive season. Customers can engrave AirPods, iPads, or Apple Pencils with names, initials, or emojis in multiple languages. This makes gifting more personal and festive.

Additionally, Apple provides free online personal sessions for device setup, ensuring that new users can get the most out of their purchases. These value-added services enhance the overall shopping experience.

Exclusive Festive Sessions

To celebrate Diwali, Apple is hosting exclusive “Today at Apple” sessions in its retail stores. These workshops, led by creative professionals, focus on festival-specific photography and videography tips using iPhones.

This initiative shows that Apple’s festive campaign is not just about discounts; it is also about creating memorable experiences for customers.

Product-Wise Breakdown of Offers

iPhones

- Up to Rs 5,000 cashback on iPhone 17 and iPhone 17 Pro.

- Trade-in options for older models.

- Free 3-month subscriptions to Apple Music, Apple TV+, and Apple Arcade.

Apple Watches

- Up to Rs 6,000 cashback on Apple Watch Series 11 and Ultra 3.

- No-cost EMI for up to 12 months.

- Advanced health features like hypertension tracking and sleep apnea detection make these watches even more appealing.

MacBooks

- Rs 10,000 cashback on MacBook Air and MacBook Pro models.

- Perfect for students, professionals, and creators.

- Bundled with 3 months of Apple TV+ and Apple Arcade.

iPads

- Up to Rs 4,000 cashback on select iPads.

- Great for students and professionals looking for portability.

AirPods and Accessories

- Rs 4,000 cashback on AirPods Pro 3.

- Free engraving options.

- Complimentary Apple Music subscription for 3 months.

Why This Year’s Offers Stand Out

While Apple has offered festive deals in the past, this year’s campaign stands out for its scale and inclusivity.

Unlike previous years, the offers extend across nearly the entire product lineup, so most customers will find something worthwhile.

The combination of cashback, EMI, trade-in, personalization, and exclusive sessions makes this year’s campaign one of Apple’s most comprehensive.

How to Avail the Offers

Customers can access these offers through:

- Apple’s official online store.

- The Apple Store app.

- Apple’s retail stores in Delhi, Mumbai, Bengaluru, and Pune.

Flexible delivery options, including express delivery and in-store pickup, make shopping convenient.

Consumer Reactions

The announcement of Apple’s 2025 festive offers has generated excitement among Indian consumers.

Social media is buzzing with discussions about the best deals, with many praising Apple for making its premium devices more affordable.

For students, professionals, and families, these offers present the perfect opportunity to upgrade devices this festive season.

Long-Term Impact

These offers are not just about short-term sales. By making devices more accessible, Apple is expanding its user base in India. More iPhone and Mac users mean greater adoption of Apple services like iCloud, Apple Music, and Apple TV+.

The success of this year’s festive offers could also encourage Apple to roll out similar campaigns in the future, further strengthening its presence in India.

Conclusion

The festive season is a great time to invest in new technology, and Apple has made it even more rewarding this year. With up to Rs 10,000 instant cashback on iPhones, Watches and more, customers can buy premium devices at attractive prices.

From cashback and EMI options to trade-in benefits and free personalization, Apple has crafted a campaign that combines value with experience.

Whether you’re upgrading your iPhone, buying a MacBook for work, or gifting an Apple Watch to a loved one, these offers ensure that you get the best deal possible.

As India gears up for Diwali and beyond, Apple’s festive offers are set to light up celebrations with innovation, savings, and joy.